Delving the depths of computing,

hoping not to get eaten by a wumpus

By Timm Murray

2024-03-30

Big new battery breakthroughs often come with headlines proclaiming ten or

even five minute charging times for EVs that can go 600 miles on a full

charge. Batteries are not the full story. These charge times would require a

whole new plug design, and likely entirely new transformers to power the charge

banks. There are bottlenecks besides batteries.

Let’s take the Tesla Model Y’s standard size battery of 60 kWh. Let’s say it’s

at 10% charge (6 kWh) and we want to go all the way to 100%. That means we need

to feed it 54 kWh (assuming no efficiency loss), which can just mean giving it

54 kW over the course of 60 minutes. To do the same in 5 minutes, we have to

feed it 650 kW.

Note that this isn’t even the biggest battery out there right now. It’s a

modest sized car with a good enough battery.

An SAE J3400 plug (the standard being developed from Tesla’s plug) supports

up to 1,000VDC and 650A of current. This means it has a max capacity of

650 kW; any efficiency loss at all will exceed the limit above. CCS

plugs only support 350 kW. Japanese CHAdeMO plugs can go up to 900 kW, but

they are rare outside of Japan.

Meanwhile, the transformers being built out for current EV infrastructure

won’t even hit that much. Tesla V4 Superchargers are also only designed

up to 250 kW. So the plug can’t support it, and that isn’t even the biggest

bottleneck.

Even if the chargers and plug were redesigned, you’re not likely to see

many of them for several years.

You can play with the numbers–a larger or smaller battery, or only going to

80% instead of 100%, and calculating in some efficiency loss–but the results

are always between “barely possible” and “not going to work”.

We could design a whole new plug that supports higher charge rates and deploy

even beefier transformers, but why? Aiming for 20 minute charge times for

250 mile range is generally more than enough even when cold weather chops

20% or even 40% off this range. That gets you 2-4 hours of driving, which

is about when you should be getting out to stretch, anyway.

How much are these 600 mile range EVs going to weigh? Why not keep them at

around 250 miles and use new battery tech to reduce their weight?

Instead of looking for absurd ranges and charge times, focus on what

people are going to do with those 20 minutes. Many of the L3 chargers you’d

use on road trips right now are in parking lots for Walmart, dealerships, or

places that are equally uninteresting and unappealing. How about a nice place

to sit down, get on wifi, and grab a cup of coffee? Or just walk around in

something other than a parking lot?

This whole problem can go away by providing a nice experience while people

are waiting.

EV charge times look a lot better if they’re combined with walkable cities.

2024-03-20

Here’s some strawman reasons that Moore’s Law is dead:

-

Photoshop doesn’t edit twice as fast every 18 months

-

GTA V framerate doesn’t double every 18 months

-

Frequency doesn’t double every 18 months

-

Hard drive capacity doesn’t double every 18 months

-

My networth doesn’t double every 18 months

None of these are correct for the simple reason that Moore never made these

claims. However, Moore’s Law is dead for completely different reasons that

nobody mentions because they haven’t read the original paper. So let’s

all do that, and keep in mind that Moore was writing before any human landed on

the Moon.

https://hasler.ece.gatech.edu/Published_papers/Technology_overview/gordon_moore_1965_article.pdf

Here’s the key quote:

The complexity for minimum component costs has increased at a rate of roughly a factor of two per year

The version of the paper above has additional material from 40 years on, and

clarifies the above:

So the original one was doubling every year in complexity now in 1975, I had to go back and revisit this… and I noticed we were losing one of the key factors that let us make this remarkable rate of progress… and it was one that was contributing about half of the advances were making.

So then I changed it to looking forward, we’d only be doubling every couple of years, and that was really the two predictions I made. Now the one that gets quoted is doubling every 18 months… I think it was Dave House, who used to work here at Intel, did that, he decided that the complexity was doubling every two years and the transistors were getting faster, that computer performance was going to double every 18 months… but that’s what got on Intel’s Website… and everything else. I never said 18 months that’s the way it often gets quoted.

Not only that, but Moore states that he was working with only a few datapoints

at the time, and expected things to continue for a few more years. He never

would have guessed back then that we’d be pushing it for decades.

So I looked at what we were doing in integrated circuits at that time, and we made a few circuits and gotten up to 30 circuits on the most complex chips that were out there in the laboratory, we were working on with about 60, and I looked and said gee in fact from the days of the original planar transistor, which was 1959, we had about doubled every year the amount of components we could put on a chip. So I took that first few points, up to 60 components on a chip in 1965 and blindly extrapolated for about 10 years and said okay, in 1975 we’ll have about 60 thousand components on a chip

The idea of 60,000 components on a chip probably sounded like huge progress at

the time. That would drive a revolution, and it did. But keep going for another

few decades? There was no expectation that it would, and he’d have been insane

to suggest it. He was extrapolating from about 6 years of data, and it needed to

be revised 10 years later.

Look carefully at that original claim: “The complexity for minimum

component costs has increased at a rate of roughly a factor of two per year”.

We’ll substitute in 18 months instead of a year. Now, it’s not that things will

double in speed every 2 years. It’s not that frequency will double every 18

months. It’s that the cost per integrated component will be cut in half every

18 months.

Let’s do some extrapolation. The Intel 8008 chip was released in April 1972 with

3,500 transistors for $120 ($906 for inflation to 2024). There have been

622 months since then, which gives us 34.6 doublings. We would therefore

expect a chip to have 3500 * 2^34.6 transistors, or about 90 trillion.

Absolutely nothing exists in that size. The largest currently released

processor is the AMD Instinct MI300A with 0.146 trillion transistors.

Let’s be more generous and go with doubling every 2 years. That means there

should be 26 doublings, for 0.234 trillion transistors. The MI300A was

released in Dec 2023, so Moore’s Law is in the right range if we give it a

2 year doubling period, right?

Wait, can you buy an MI300A for $906 (the inflation adjusted price of the

Intel 8008)? No, not even close. It’s in the “if you have to ask, you can’t

afford it” range. Reports put it around $10k to $15k each.

So Moore’s Law is dead because you can’t buy a 200B transistor device for

around $1000. Not even close.

All that said, Moore’s original paper is quite remarkable. He made an

extrapolation that held on for a decade more, and then held on for a few

more decades after a little revision. He’s also looking ahead to how this

would affect everything from radar to putting computers in the home and cars.

It was a remarkable prognostication.

2024-03-19

After dabbling with Gemini, I don’t think I’ll bother anymore. You can

ultimately accomplish its goals with plain HTTP if you avoid cookies or pixel

trackers or JavaScript. Which this blog now does.

It also forced links to the end of the document and other formatting

conventions.

I took the export of the old WordPress blog and parsed it into Markdown,

and used that as the basis for statically generating everything. A bunch of

embedded YouTube iframes were converted to links. Those were the only

source of JavaScript or cookies left on the site, and they’re all gone now.

Could do something fancy later like taking the thumbnails, but this is fine for

now.

I’m interested in how far static site generators could go. When we have

servers with a terrabyte of RAM and flash storage in tens or even hundreds

of terrabytes, why not use that space to dramatically speed up response time

to the client? Can you have a static site CMS that’s on par with WordPress?

2022-06-06

Let’s start with a moderately simple regex:

/\A(?:\d{5})(?:-(?:\d{4}))?\z/

Some of you might be smacking your forehead at the thought of this being “simple”, but bear with me. Let’s break down what it does piece by piece. Match five digits, then optionally, a dash followed by four digits. All in non-capturing groups, and anchor to the beginning and end of the line. That tells you what it does, but not what it’s for.

Explaining out the details in plain English, as in the above paragraph, doesn’t help anyone understand what it’s for. What you can do to help is have good variable naming and commenting, such as:

# Matches US zip codes with optional extensions

my $us_zip_code_re = qr/\A(?:\d{5})(?:-(?:\d{4}))?\z/;

Like any other code, we hand off contextual clues about its purpose using variable naming and comments. In Perl, qr// gives you a precompiled regex that you can carry around and match like:

if( $incoming_data =~ $us_zip_code_re ) { ... }

Which some languages handle by having a Regex object that you can put in a variable.

There are various proposals for improved syntax, but a different syntax wouldn’t help with this more essential complexity. It could help with readability overall. Except that Perl implemented a feature for that a long time ago that doesn’t so drastically change the approach: the /x modifier. It lets you put in whitespace and comments, which means you can indent things:

my $us_zip_code_re = qr/\A

(?:

\d{5} # First five digits required

)

(?:

# Dash and next four digits are optional

-

(?:

\d{4}

)

)?

\z/x;

Which admittedly still isn’t perfect, but gives you hope of being maintainable. Your eyes don’t immediately get lost in the punctuation.

I’ve used arrays and join() to implement a similar style in other languages, but it isn’t quite the same:

let us_zip_code_re = [

"\A",

"(?:",

"\d{5}", // First five digits required

")",

"(?:",

// Dash and next four digits are optional

"-",

"(?:",

"\d{4}",

")",

")?",

].join( '' );

Which helps, but autoidenting text editors try to be smart with it and fail. Perl having the // syntax for regexes also means editors can handle syntax highlighting inside the regex, which doesn’t work when it’s just a bunch of strings.

More languages should implement the /x modifier.

2022-05-05

I haven’t updated the blog in a while, and I’m also rethinking the use of WordPress. So I decided to dump the old posts, and convert it to gemtext, the Gemini version of markdown.

https://gemini.circumlunar.space/

The blog will be hosted on Gemini, as well as static HTTP.

Not all the old posts converted cleanly. A lot of the code examples don’t come through with gemtext’s preformatted blocks. Some embedded YouTube vids need their iframes converted to links. Comments are all tossed, which is no big loss since 95% of them were spam, anyway.

2021-02-08

https://www.youtube.com/watch?v=DcEMNovvb1s

2021-01-01

People often misunderstand the speed ratings of SD cards. They are built cheaply, and historically have primarily targeted digital cameras. That meant they emphasized sequential IO performance, because that’s how pictures and video are handled. When we plug an SD card into a Raspberry Pi, however, most of our applications will use random reads and writes, which is often abysmal on these cards.

Enter the A1 and A2 speed ratings. These were created by the SD Card Association to guarantee a minimum random IO performance, which is exactly what we want for single board computers.

There’s been a blog post floating around about how the A2 class is marketing BS. In some cases, I’ve seen this cited as evidence of the A1 class also being marketing BS. After all, the top cards on the chart for random IO, the Samsung Evo+ and Pro+, don’t have any A class marks on them.

This misunderstands what these marks are for and how companies make their cards. Your card has to meet a minimum speed to have a given mark. You are free to exceed it. It just so happens that Samsung makes some really good cards and have yet to apply for the mark on them.

Samsung could change the underlying parts to one that still meets its certification marks for the model, but with far worse random IO performance. I don’t think Samsung would do that, but they would technically be within their rights to do so. This kind of thing has happened in the storage industry before. Just this past year, Western Digital put Shingled Magnetic Recording on NAS drives (SMR is a hard drive tech that craters random write performance, making them completely unsuitable for NAS usage). XPG swapped out the controller on the SX8200 Pro to a cheaper, slower one invaliding the praiseworthy reviews they got at launch.

Even if Samsung wouldn’t do that, it’s still something where you have to go out of your way to find the best SD card. Well-informed consumers will do that, but the Raspberry Pi serves a broad market. Remember, its original purpose was education. You can’t expect people to even know what to research when they’re just starting out. It also doesn’t help that major review sites don’t touch on SD cards. Benchmarking can be a tricky thing to do right, and most hobbyist bloggers don’t have the resources to do a good, controlled test, even if they mean well.

What the A class marks do is give a clear indication of meeting a certain minimum standard, at least in theory. Independent reviews are always good in order to keep everyone honest, but if you don’t have time to look at them, you can grab an A1 card and put it in your Pi and you’ll be fine.

As the blog post noted above states, A2 cards don’t always live up to their specs. According to a followup post, this appears to be due to things the OS kernel needs to support, rather than the card itself. It’s also possible for a company to try to pull a fast one, where they launch with a card that meets spec, and then quietly change it. However, if they do, they don’t just have consumer backlash to contend with, but the SD Card Association lawyers. Since they hold the trademark on the A class marks, they have the right to sue someone who is misusing it.

Marketing isn’t just about making people into mindless consumers. It can also be about conveying correct information about your product. That’s what the A classes are intended to do. Nobody knew that Samsung Evo and Pro cards were good until somebody tested them independently. With the A class marks, we have at least some kind of promise backed up with legal implications for breaking it.

2020-10-28

I slapped GStreamer1 together some years ago using the introspection bindings in Glib. Basically, you point Glib to the right file for the bindings, and it does most of the linking to the C library for you. They worked well enough for my project, so I put them up on CPAN. They cover gstreamer 1.0, as opposed to the GStreamer module, which covers 0.10 (which is a deprecated version).

It has an official Debian repository now, but I was informed recently that it would be removed if development continued to be inactive. Besides having enough time, I also don’t feel totally qualified to keep it under my name. I don’t know Gtk all that well, and don’t have any other reason to do so anytime soon. I was just the guy who made some minimal effort and threw it up on CPAN.

I’ve found GStreamer to be a handy way to access the camera data in Perl on the Raspberry Pi. It’s a bit of a dependency nightmare, and keeping it in the Debian apt-get repository makes installation a lot easier and faster.

So it’s time to pass it on. As I mentioned, they’re mostly introspection bindings at this point. It would be good to add a few custom bindings for things the introspection bindings don’t cover (enums, I think), which would round out the whole feature set.

2020-09-07

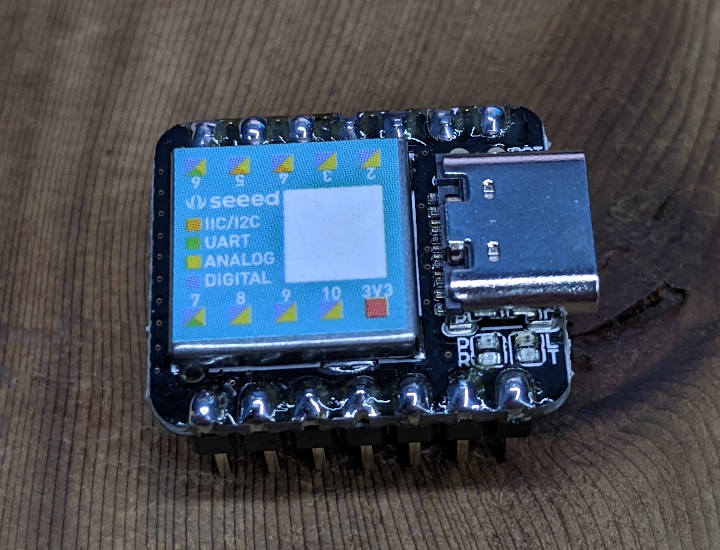

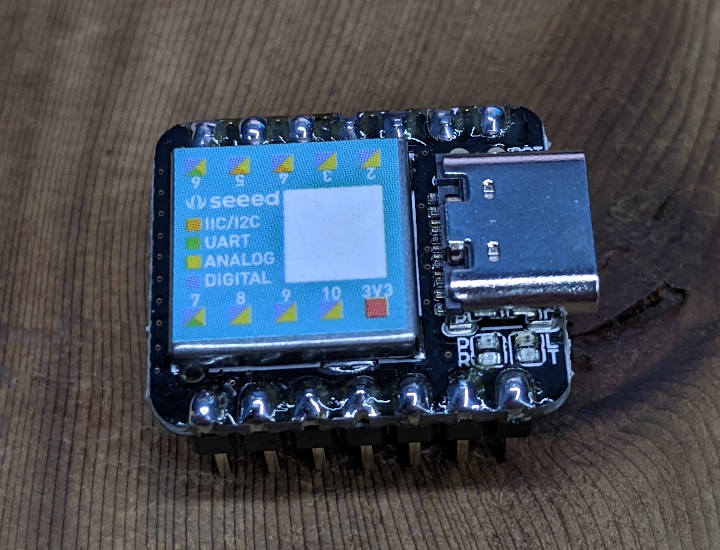

Seeed has been picking up in the maker community as a source of low cost alternatives to popular development boards. The Rock Pi S is an excellent competitor to the Raspberry Pi Zero, providing the quad core processor that the Pi Zero lacks. They have also released the Seeed XIAO, a small ARM-based microcontroller competing with Arduino or Teensy boards, and sent me units for this review.

The board is small but capable, with an ARM Cortex M0+ 32-bit processor running at 48MHz, 256KB of flash, and 32KB of SRAM. Programming is done through a USB-C port, which also powers the device. There are 14 pins, including one 5V output, one 3.3V output, and one ground. The rest are I/O pins, all of which are capable of digital GPIO, analog input, and PWM output. There’s also a single DAC channel, plus I2C, SPI, and UART. Also included is a set of stickers that fit on the board’s main chip and shows the basic pinout of the main 10 pins.

Shipping came directly from China, and took several months. I’m sure at least some of the delay was likely due to Coronavirus issues. Still, this isn’t the first time I’ve seen Seeed be slow to ship. If you’re interested in this product, I suggest ordering in advance.

Once it arrived, the board was easy to setup. Pin headers come in the package, but will need to be soldered. Their wiki page for the board was easy to follow, especially if you have previous experience with Arduino-based boards. Programming is done through the Arduino IDE, using a plugin that provides the right compiler environment and firmware flasher. Many new boards are preferring the free version of Microsoft Visual Studio with PlatformIO, but Seeed is choosing to stick with Arduino’s IDE for this one.

The board can be tricky to get into programming mode. When uploading code, I often encountered an error like this in the IDE console (on a Windows machine):

processing.app.debug.RunnerException ... Caused by: processing.app.SerialException: Error touching serial port 'COM5'. ... Caused by: jssc.SerialPortException: Port name - COM5; Method name - openPort(); Exception type - Port busy.

Fixing this is done by double tapping the reset pad with a wire over to the pad right next to it. When it goes into bootloader mode correctly, Windows would often pop up a file manager for the device, as if a USB flash drive had been connected. This may also change the COM port, so be sure to check that before trying to upload again.

With that issue sorted, I uploaded the basic LED blinker example from Arduino, and it worked fine against the LED on the board itself. That shows the environment was sane and working.

I next turned my attention to a project I’ve had sitting on my shelf for a while: modifying an old fashioned oil lantern for a Neopixel. I received this lamp from a secret santa exchange party last year, and was told that while it had all the functional parts, it wasn’t designed to take the heat of a real burning wick. So I picked up Adafruit’s 7 Neopixel jewel, and combined it with NeoCandle, which would give a slight flickering effect. NeoCandle was designed for 8 Neopixels rather than 7, but commenting out the call to the eighth pixel worked fine.

Complicated libraries like this are often made for Arduino boards first, and everything else second, so I was bracing myself for a long debugging session to figure out compatibility. My worry was unnecessary, as the Adafruit Neopixel library worked without a hitch.

(White balance on my phone camera wasn’t cooperating. The color of the light is more orange in person. It also has a very subtle flicker effect.)

At $4.90 each, this is a nice little board with a good feature set. It’s cheaper than the Teensy LC, and in terms of processing power, more capable than Arduino Minis. Getting the binary uploaded can be janky, but to be fair, that’s common on a lot of ARM-based microcontrollers. When a project doesn’t need a lot of output pins, I would use this in place of a Teensy LC. Just watch for those shipping times.

2020-05-11

If you ask for advice on how to store passwords, you’ll get some responses that are sensible enough. Don’t roll your own crypto. Salted hashes aren’t good enough against GPU attacks. Use a function with a configurable parameter that causes exponential growth in computation, like bcrypt. If the posters are real go-getters that day, they might even bring up timing attacks.

This is fine advice as far as it goes, but I think it’s missing a big picture item: the advice has changed a number of times over the years, and we’re probably not at the final answer yet. When people see the current standard advice, they tend to write systems that can’t be easily changed. This is part of the reason why you still have companies using crypt() or unsalted MD5 for their passwords, approaches that are two or three generations of advice out of date. Someone has to flag it and then spend the effort needed for a migration. Having run such a migration myself, I’ve seen it reveal some thorny internal issues along the way.

Consider these situations:

-

You run bcrypt. Is your cost parameter sufficient against attacks on current hardware? Can your servers afford to push it a little higher? What’s your process for changing the cost parameter? Do you check in every year to see if it’s still sufficient? If you changed it, would only new users receive the upgraded security, or does it upgrade users every time they login?

-

You run scrypt. Tomorrow, news comes out showing that scrypt is irrevocably broken. What’s your process for switching to something different? As above, would it only work for new users, or do old users get upgraded when they login?

-

Your system is just plain out of date, running

crypt() or salted SHA1 or some such. How do you migrate to something else?

Castellated is an approach for Node.js, written in Typescript, that allows password storage to be easily migrated to new methods. Out of the box, it supports encoding in argon2, bcrypt, scrypt, and plaintext (which is there mostly to assist testing). A password encoded this way will look something like this:

ca571e-v1-bcrypt-10-$2b$10$wOWIkiks.tbbftwkJ81BNeuOtq631SzbsVOO7VAHf5ziH.edAAqJi

This stores the encryption type, its parameters, and the encoded string. We pull this string out of a database, check that the user’s password matches, and then see if the encoding is the preferred type. Arguments (such as the cost parameter) are also checked. If these factors don’t match up, then we reencode the password with the new preferred types and store the result. This means that every time a user logs in (meaning we have the plaintext password available to us), the system automatically switches over to the preferred type.

There is also a fallback method which will help in migrating existing systems.

All matching is done using a linear-time algorithm to prevent timing attacks.

It’s all up on npm now.

Next →

Copyright © 2024 Timm Murray

CC BY-NC

Opinions expressed are solely my own and do not express the views or opinions

of my employer.